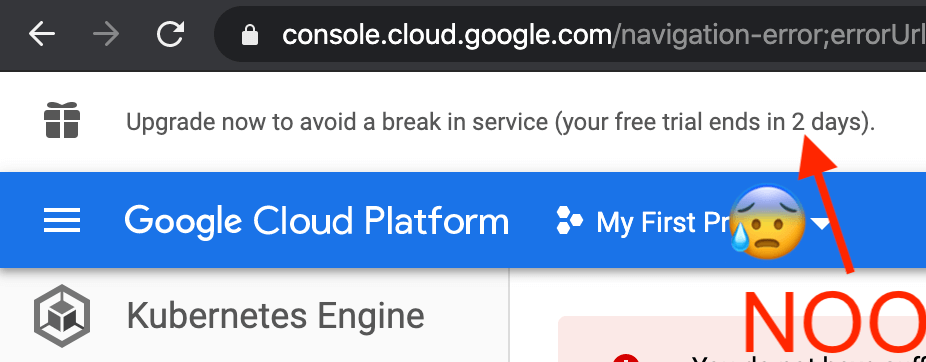

To learn and play with Kubernetes, I keep a “playground” cluster to try things on it (helm files I use are here). Since 2019, I have been using GKE (Google’s managed Kubernetes service), which works great with the one-year $300 credit that you get initially. A few days ago, reality hit me hard with this message:

I had only 2 days to find a plan and migrate everything away from GKE! My current setup was only using a single n1-standard-1 on us-west-1 and with no network load balancer. But that was still around €15 a month, and I just didn’t want to pay. I chose to migrate to Civo’s managed K3s since they are in beta and I really wanted to try K3s.

Civo’s Managed K3s

Civo is a company that offers a public cloud as well as managed Kubernetes clusters. Their managed Kubernetes offer, named “KUBE100”, is quite new (launched in mid-2019). Unlike most managed Kubernetes offerings like EKS or GKE, Civo went with Rancher’s K3s Kubernetes distribution.

Compared to the standard Kubernetes distribution, K3s is a way lighter: simple embedded database with sqlite, one single binary instead of four; a single VM can both host the control plane and run pods. With the traditional Kubernetes distribution, you have to run the control plane on a separate VM. Note that Civo has a whole blog post on “Kubernetes vs. K3s”.

This lightweight feature is what brings me here, and also the fact that K3s comes by default with Traefik & their own tiny Service type=LoadBalancer controller, which means you don’t even need an expensive network load balancer like on GKE when you want to expose a service to the internet.

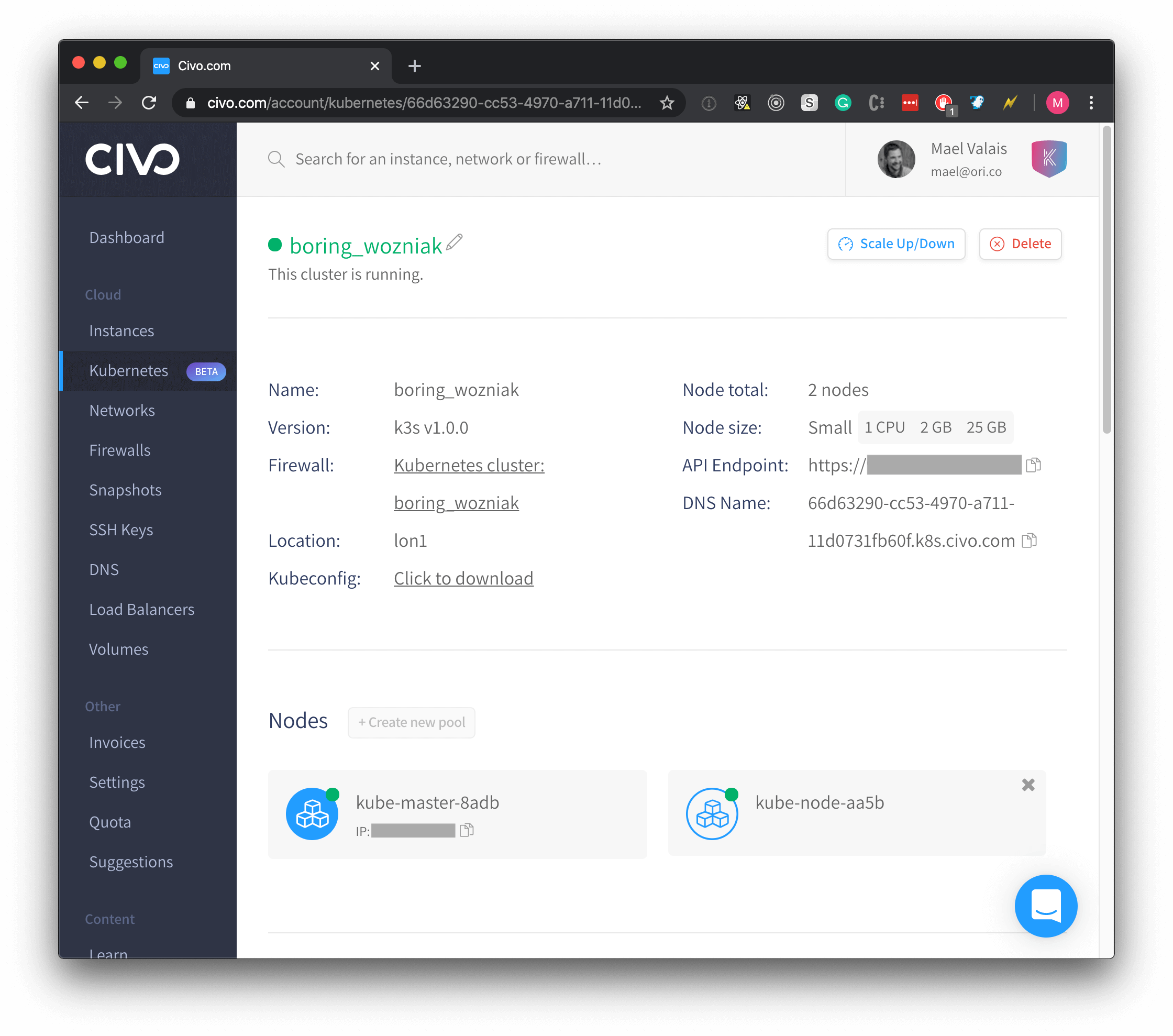

Of course, K3s has drawbacks and is probably meant for IoT-based clusters, but it’s also perfect for playing around with Kubernetes! So I went ahead and create a two-nodes cluster:

Note that since this is K3s, the master can also be scheduled with pods. So I could have gone with a single node.

Migrating ExternalDNS, cert-manager and Traefik

That’s the easy part. I just had to run ./helm_apply which runs helm upgrade --install for each helm chart I use.

In the process, I decided to go with *.k.maelvls.dev instead of *.kube.maelvls.dev. The shorter, the better! I forgot to add that I still use Google’s CloudDNS. I did not find an easy alternative yet. Maybe just Cloudflare for now?

After creating Traefik, I knew that its service would automatically be populated with the status.loadBalancer field thanks to K3s’ servicelb. Let’s see:

% kubectl -n traefik get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S)

traefik LoadBalancer 192.168.255.134 91.211.152.190 443:32164/TCP,80:32684/TCP

Great! Now, Traefik will propagate this external IP to the ingresses, and ExternalDNS will use the status.loadBalancer from these ingresses in order to set A records.

If you want to know more about how “servicelb” works, you can take a look at The Packet’s-Eye View of a Kubernetes Service where I describe how Akrobateo works (K3s’ servicelb has the exact same behavior).

Migrating MinIO from the old to the new cluster

I use minio for various uses. It is great if you want a S3-compatible storage solution. In order to migrate, I followed this. It’s quite painless:

$ kubectl -n minio run a --generator=run-pod/v1 -it --rm --restart=Never --image=alpine

% wget https://dl.minio.io/client/mc/release/linux-amd64/mc && install mc /usr/bin

% mc config host add old https://minio.kube.maelvls.dev AKIAIOSFODNN7EXAMPLE "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY" --api S3v4

% mc config host add new http://minio:9000 AKIAIOSFODNN7EXAMPLE "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY" --api S3v4

% mc ls old/ # List buckets since I had to create manually each bucket.

% mc mb new/bucket1 # Then create each bucket one by one.

% mc cp --recursive old/bucket1/ new/bucket1/

I also decided to change the access key & secret key. Again, quite painless. As mentioned in the documentation, I changed the secret stored in Kubernetes:

kubectl -n minio edit secret minio

and then I temporarily added the MINIO_ACCESS_KEY_OLD and MINIO_SECRET_KEY_OLD to the deployment by editing it.

kubectl -n minio edit deployment minio

After that, the pods get recreated, and MinIO picks up the new secret. Note: I also had to edit the deployment again in order to remove the temporary _OLD environment variables.

To recap, the whole migration was painless. The only data I migrated was MinIO. Note that I didn’t have any SLA to comply with, but if I had planned a bit better, I could have moved over with almost zero downtime.

In order to get almost-zero-downtime, I would have made sure to keep the old and new MinIO instances replicated until the move was over. The only problem with the whole migration is the DNS change. I cannot precisely know when the propagation will take. After completing the migration and assuming that all the DNS entries are propagated, if for some reason people keep hitting the old IP due to outdated DNS entries, the old and new clusters would have become out-of-sync. To mitigate that issue, I could have chosen to “cordon” the old cluster just to make sure that this case never happens.

The repo for my Kubernetes playground cluster (*.k.maelvls.dev) is available here.

- Update 7 May 2020: better introduction explaining why I use GKE, tell what Civo and K3s are all about.